Can AI Really Moderate Content? The Truth Behind the Tech

AI content moderation brings real-time analysis to online safety, with speed, scale, and precision.

The internet is drowning in content, now more than ever.

Every day, over 350 million photos are uploaded to Facebook. 350 million. Every day. That’s roughly 14.6 million photos per hour, or about 243,000 every minute.

And that’s just Facebook.

Toss in Instagram, X, TikTok, LinkedIn, and every other social media platform, and the overall upload numbers are truly unfathomable.

That sheer volume of content leads to a harsh truth: not all of it is harmless selfies or cat memes. A chunk of it is pure poison: hate-filled rants, scams, or unhinged garbage that can torch a brand’s reputation in a heartbeat.

One viral post calling out your company with venom? Good luck shaking that off. One scam slipping through the cracks? Say goodbye to user trust.

Getting through this content manually would take tens of thousands of people working around the clock. And even then, some harmful posts would likely be missed.

5 Different Ways Artificial Intelligence Polices Online Content

Not all AI moderation systems work the same.

Some catch problems before users see them, and others rely on digital communities to flag issues. Each approach trades off safety against user experience in a different way.

Let's break down how each one works, what it's good at, and where it falls short.

Pre-Moderation

With Pre-Moderation, nothing goes live until AI checks it first.

Every image, comment, and video sits in a queue waiting for AI approval.

This works great for kid-focused platforms where safety trumps everything else. (Think educational apps or child-friendly games.)

The downside is that everything slows down. Users post something and wait... and wait.

Real conversations may become impossible under this kind of moderation when comments take minutes to appear.

Post-Moderation

Content goes live immediately, but AI swoops in seconds later to sniff out trouble. Users get instant gratification while platforms maintain safety guardrails.

This balances user experience with protection. Facebook, Instagram, and X use the Post-Moderation approach to handle billions of posts.

The risk? Harmful content might appear briefly before removal. A few seconds is all it takes for some troll to screenshot and spread the chaos.

The risk grows as more platforms push the onus onto users, as X already does and as Meta's platforms are moving toward.
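To make the difference between pre-moderation and post-moderation concrete, here is a minimal sketch. The `Platform` class and the keyword-based `is_harmful` check are hypothetical stand-ins; a real system would call a trained classifier, and post-moderation checks would usually run asynchronously rather than inline.

```python
from dataclasses import dataclass, field


@dataclass
class Platform:
    mode: str  # "pre" or "post" (illustrative values)
    live: list = field(default_factory=list)

    def is_harmful(self, text: str) -> bool:
        # Placeholder check, assumed for the example only.
        return "scam" in text.lower()

    def submit(self, text: str) -> str:
        if self.mode == "pre":
            # Pre-moderation: check first, publish only if clean.
            if self.is_harmful(text):
                return "rejected"
            self.live.append(text)
            return "published"
        # Post-moderation: publish first, then check and remove if needed.
        self.live.append(text)
        if self.is_harmful(text):
            self.live.remove(text)
            return "removed after publishing"
        return "published"
```

In the post-moderation branch the content sits in `live` for a moment before the check runs, which is exactly the window a screenshot-happy troll needs.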

Reactive Moderation

With Reactive Moderation, your users are the first ones swinging.

They spot something shady (hate, spam, aggression, whatever is toxic) and flag it for AI to check. It’s lean and mean, great for scrappy platforms with tight budgets.

Reddit ran this playbook in its early days before upgrading. The downside’s obvious: the nasty stuff sits there, stinking up the place, until someone yells “hey, this sucks!” Until then, your community is stuck staring at it.
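A reactive setup can be sketched as a flag counter that only queues a post for review once enough distinct users have reported it. The `FlagTracker` class and the threshold of three reporters are assumptions for illustration, not a description of any real platform.

```python
from collections import defaultdict

FLAG_THRESHOLD = 3  # assumed: distinct reporters needed before review


class FlagTracker:
    def __init__(self, threshold: int = FLAG_THRESHOLD):
        self.threshold = threshold
        self.reporters = defaultdict(set)  # post_id -> set of user ids
        self.review_queue = []

    def flag(self, post_id: str, user_id: str) -> bool:
        """Record a flag; return True if the post was just queued for review."""
        # A set ignores repeat flags from the same user.
        self.reporters[post_id].add(user_id)
        enough = len(self.reporters[post_id]) >= self.threshold
        if enough and post_id not in self.review_queue:
            self.review_queue.append(post_id)
            return True
        return False
```

Nothing happens until the third distinct reporter shows up, which is the weakness described above: the post stays visible the whole time.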

Distributed Moderation

The crowd decides what stays and what goes.

AI tallies user votes to determine whether content violates standards. This gives the community ownership of platform rules, and users feel invested in maintaining quality.

However, platforms need to watch out for coordinated voting campaigns. Groups can manipulate these systems to remove legitimate content they dislike.
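Here is one possible way to tally votes while blunting a coordinated campaign: count one vote per user, ignore very new accounts, and require a quorum. Every name and number below (`Vote`, `MIN_VOTES`, `MIN_ACCOUNT_AGE_DAYS`, `REMOVE_RATIO`) is an assumption chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Vote:
    user_id: str
    remove: bool  # True = "remove this", False = "keep this"
    account_age_days: int


MIN_VOTES = 5              # assumed quorum
MIN_ACCOUNT_AGE_DAYS = 30  # assumed: ignore brand-new accounts
REMOVE_RATIO = 0.7         # assumed share of "remove" votes required


def should_remove(votes: list[Vote]) -> bool:
    # Keep one vote per user (the latest) and drop very new accounts.
    eligible = {}
    for v in votes:
        if v.account_age_days >= MIN_ACCOUNT_AGE_DAYS:
            eligible[v.user_id] = v
    if len(eligible) < MIN_VOTES:
        return False  # not enough trusted votes to decide
    remove_votes = sum(v.remove for v in eligible.values())
    return remove_votes / len(eligible) >= REMOVE_RATIO
```

Account age is a crude defense on its own. It stops a wave of throwaway accounts, but not a coordinated group of long-standing users.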

Hybrid Moderation

Hybrid moderation combines AI screening with human review for tricky cases.

AI handles obvious violations while humans tackle nuanced decisions. This provides the most complete protection without sacrificing efficiency.

The challenge? You need clear guidelines on what AI handles versus what needs human eyes. Without those boundaries, you'll waste resources or miss violations.

Tired of playing content cop? Let AI or Not’s advanced detection do the heavy lifting.

How Does AI Content Moderation Work? (Step-by-Step)

Here’s how AI moderation gets it done, step by step.

Step 1: Content Collection

It all starts when someone uploads text, images, videos, or audio to your platform.

Every meme, comment, and profile pic gets funneled into the system. Your platform collects this content in real time, often processing thousands of submissions per second.

Step 2: AI Analysis

Now the AI gets to work, using different tools for different content types. Text goes through language processors that read beyond just bad words. Images run through vision systems that spot everything from nudity to violence. Audio gets transcribed and analyzed for threats or harassment.

Step 3: Pattern Recognition

The AI compares what it sees against known patterns of problematic stuff. Is this profile pic similar to known fake accounts? Does this comment match patterns of hate speech? The system assigns risk scores based on these matches.
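As a rough illustration of turning pattern matches into a risk score, the sketch below checks text against a few regular expressions and combines their weights. The patterns, the weights, and the noisy-OR combination are all assumptions made for the example; production systems rely on trained models rather than hand-written rules.

```python
import re

# Hypothetical patterns and weights, chosen only for illustration.
PATTERNS = {
    "scam_link": (re.compile(r"https?://\S*free-?money\S*", re.I), 0.8),
    "urgency": (re.compile(r"\b(act now|limited time)\b", re.I), 0.3),
    "all_caps": (re.compile(r"\b[A-Z]{6,}\b"), 0.2),
}


def risk_score(text: str) -> tuple[float, list[str]]:
    """Combine matched pattern weights into a 0..1 score (noisy-OR)."""
    matched = [name for name, (rx, _) in PATTERNS.items() if rx.search(text)]
    safe_prob = 1.0
    for name in matched:
        # Each match independently lowers the chance the text is safe.
        safe_prob *= 1.0 - PATTERNS[name][1]
    return 1.0 - safe_prob, matched
```

Returning the matched pattern names alongside the score keeps the result explainable, which helps when a human later has to review the call.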

Step 4: Decision Engine

Based on those risk scores, the AI makes a call. Green light? Content goes live instantly. Red light? It gets blocked. Yellow light? A human gets called in because the AI isn't 100% sure. All this happens in milliseconds.
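The green, yellow, and red outcomes can be sketched as two thresholds on the risk score. The cut-off values and the names `decide`, `APPROVE_BELOW`, and `BLOCK_AT_OR_ABOVE` are hypothetical; each platform would tune its own.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "green"
    HUMAN_REVIEW = "yellow"
    BLOCK = "red"


APPROVE_BELOW = 0.30      # assumed cut-off for auto-approval
BLOCK_AT_OR_ABOVE = 0.85  # assumed cut-off for auto-blocking


def decide(risk: float) -> Decision:
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    if risk < APPROVE_BELOW:
        return Decision.APPROVE
    if risk >= BLOCK_AT_OR_ABOVE:
        return Decision.BLOCK
    # Everything in between is uncertain, so a human takes a look.
    return Decision.HUMAN_REVIEW
```

Widening the gap between the two thresholds sends more content to humans. Narrowing it saves reviewer time but raises the odds of a wrong automatic call.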

Step 5: Feedback Loop

This is where humans make AI smarter. When moderators review flagged content, their decisions teach the system. Was that meme actually harmless? The AI takes notes for next time. The system gets better with every piece of content it processes.
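Real systems typically feed moderator decisions back in as labeled training data. As a much simpler stand-in, the sketch below nudges a single flagging threshold whenever a moderator disagrees with the AI. `ThresholdTuner`, its starting threshold, and its step size are illustrative assumptions.

```python
class ThresholdTuner:
    """Nudges the flagging threshold using moderator verdicts."""

    def __init__(self, threshold: float = 0.5, step: float = 0.01):
        self.threshold = threshold
        self.step = step

    def record(self, risk: float, moderator_says_harmful: bool) -> None:
        flagged = risk >= self.threshold
        if flagged and not moderator_says_harmful:
            # False positive: be a little less aggressive.
            self.threshold = min(0.99, self.threshold + self.step)
        elif not flagged and moderator_says_harmful:
            # Missed violation: be a little more aggressive.
            self.threshold = max(0.01, self.threshold - self.step)
        # When AI and moderator agree, nothing changes.
```

For example, after `tuner.record(0.6, False)` on a fresh tuner, the threshold moves from 0.50 to 0.51, so slightly fewer borderline posts get flagged next time.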

Does it really work, though? Facebook has reported that its AI now finds about 95% of the hate speech it removes before any user reports it. A few years earlier? That number was below 40%.

Why Smart Businesses Use AI for Moderation: The Benefits

Smart companies aren't wasting human hours on tasks machines handle better. Today, AI doesn't just help with moderation—it transforms it completely.

Here's how:

✅ Speed: Processes millions of content pieces in seconds (not days or weeks)

✅ Scale: Handles growing content volume without adding more human reviewers

✅ Consistency: Applies the same rules every time without human fatigue or bias

✅ Cost-efficiency: Reduces the need for large moderation teams while improving coverage

✅ 24/7 Operation: Works around the clock without breaks

Stop drowning in AI fraud. AI or Not scans everything—images, audio, videos—in real-time.

Use Cases Where AI Moderation Shines

Here are some sectors and industries that are really benefiting from AI-based content moderation:

E-commerce: Preventing fake reviews, scams, and inappropriate product listings

Social Media: Filtering harmful content, hate speech, and misinformation

Online Communities: Maintaining constructive discussion environments

Gaming: Monitoring in-game chat for harassment and abuse

Educational Platforms: Creating safe learning environments for all ages

Dating Apps: Preventing harassment, scams, and inappropriate imagery

Government Forums: Ensuring productive civic discourse

Art Marketplaces: Preventing AI-generated art from being sold as human-made

Brutal Challenges AI-Led Content Moderation Still Faces

While AI moderation tools have come a long way, they're still fighting with one hand tied behind their back. The tech works great until it doesn't—and those failures can seriously damage your brand reputation overnight.

AI Is Increasing Volume... But It Also Helps Automation

AI is making it easier to both create AND spot fake content.

Today's generative models can produce fake videos that look eerily real.

These AI systems pump out harmful content faster than humans can review it. A single bad actor with basic tech skills can flood your platform with thousands of violations hourly.

The same tech making these fakes can help catch them. AI detection tools like AI or Not can instantly flag synthetic images and audio.

Platforms can now run content through such detection systems before it goes live. It's like having AI check for clues left behind by its generative counterparts.

The "Free Speech" Tightrope Walk

Perfect content moderation simply doesn't exist.

Block too much and users scream censorship. Block too little and you're suddenly hosting harmful content.

Furthermore, what's acceptable in the US might be illegal in Germany or even blasphemous in Saudi Arabia.

And should you have different rules for different places? Users want both total protection and complete freedom simultaneously.

So flawless moderation might be an impossible task.

But platforms like AI or Not can manage this tension by building transparency into their systems. Explain why content was removed, offer an appeals process, and admit when you're wrong.

Because in 2025, trust matters as much as speed.

Human-AI Dream Team: Creating An Efficient Content Moderation System

The answer isn't choosing between AI or humans – it's building a smart partnership between both.

AI handles the heavy lifting by scanning millions of posts instantaneously. It catches obvious violations like nudity or spam in milliseconds. Meanwhile, your human team tackles the tricky stuff AI misses – like sarcasm, cultural references, or coded language that flies under the algorithmic radar.

The magic happens in the tiered system. AI automatically approves safe content and blocks obvious violations. The borderline cases? Those go to human experts who understand context and nuance. Facebook, for example, pairs its AI with 15,000+ human moderators who focus on content flagged as suspicious.

When moderators mark something as harmful that AI missed, the system learns. This feedback loop makes your AI smarter each day without requiring constant reprogramming.

What about AI-generated content? Some of it might seem perfectly fine, like a movie star not feeling well or an influencer sharing their opinion on a hot topic. But flagging it as AI-generated can escalate it to human review before it goes live to the masses. Alternatively, the system can label it as AI-generated and pass it to user moderators to decide whether it's OK.

The bottom line? AI handles volume. Humans handle judgment. Together, they create the safest possible online space.

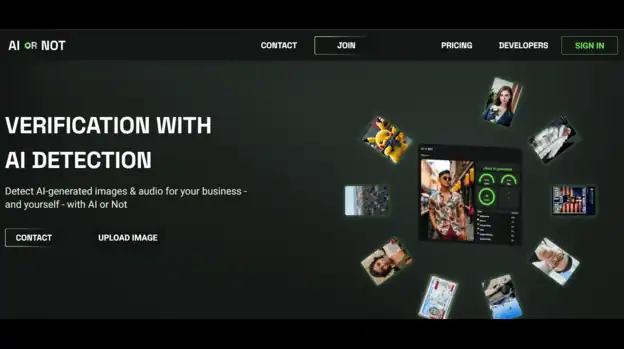

AI or Not: Effectively Fighting AI-Generated Misinformation

AI-generated fakes are flooding moderation queues faster than humans can spot them. Your team is drowning in content while scammers get better at tricks.

AI or Not cuts through this mess with tech that fights fire with fire.

Our system spots AI fakes in seconds—not hours or days when damage is already done.

But what makes AI or Not different from other solutions? It's built for speed and accuracy without the privacy headaches:

Real-time detection: Spots AI-generated images, audio, and deepfakes within seconds

Enterprise-grade accuracy: Delivers 98%+ accuracy across all content types

Privacy-focused: Deletes all client data immediately after analysis—zero exceptions

Integration options: Plugs directly into your existing systems through simple API calls

Comprehensive coverage: Catches content from Midjourney, DALL-E, Stable Diffusion, and more.

Next Steps in Getting Started With AI-Based Content Moderation

Step 1: Define what content violates your community standards. Be specific about AI fakes.

Step 2: Choose either AI or Not’s web platform or API (based on your volume needs)

Step 3: Start with AI screening plus human review to build trust in the system

Step 4: Create a simple appeal process for users who believe content was wrongly flagged

Step 5: Stay current with our monthly updates as new AI generation methods emerge.

Want to see how it works? Upload a suspicious image right now and watch our system get to work instantly.

The Future of AI for Content Moderation: The Latest Trends

AI-based content moderation is rapidly evolving, driven by these key trends:

Human-in-the-Loop (HITL):

Companies increasingly combine AI with human oversight. AI handles clear-cut cases, while humans tackle nuanced decisions requiring judgment. This reduces false positives and negatives, improving overall moderation accuracy.

Multi-modal analysis:

Platforms now integrate text, audio, and visual data into unified moderation models. By analyzing multiple signals simultaneously, these systems better understand context and intent, reducing misinterpretation risks.
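A unified multi-modal model is beyond a short example, but the basic idea of combining signals can be sketched with late fusion: score each modality separately, then take a weighted average. The `fuse` function and the weights are assumptions for illustration, and this is a simpler technique than the unified models described above.

```python
from typing import Optional

# Assumed weights per modality; a real system would learn these.
WEIGHTS = {"text": 0.4, "image": 0.4, "audio": 0.2}


def fuse(scores: dict[str, Optional[float]]) -> float:
    """Weighted average of risk scores over the modalities present."""
    present = {
        m: s for m, s in scores.items() if s is not None and m in WEIGHTS
    }
    if not present:
        raise ValueError("no known modality scores supplied")
    # Renormalize so missing modalities don't drag the score down.
    total_weight = sum(WEIGHTS[m] for m in present)
    return sum(WEIGHTS[m] * s for m, s in present.items()) / total_weight
```

A post with a harmless caption but a risky image, say `fuse({"text": 0.2, "image": 0.9, "audio": None})`, ends up with a mid-range score instead of slipping through on the strength of its text alone.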

Real-time learning:

AI systems now learn in real time, adapting to new threats without needing full retraining. This capability is vital for staying ahead of evolving AI-generated content, such as deepfakes and synthetic media.

Explainable AI (XAI):

Transparency is critical. New moderation tools clearly explain their decisions, providing detailed reasoning behind why content is flagged or approved. This transparency builds trust among users and regulators.

Edge computing:

AI content moderation is moving directly onto user devices. On-device analysis reduces latency and eases privacy concerns, since data doesn't leave the user's device.

Domain-specific models:

Specialized AI models tailored to specific industries—like finance or healthcare—understand industry-specific language and contexts. These specialized models significantly improve moderation accuracy for niche content.

Finding the Right Balance Between AI and Content Moderation

In the end, content moderation isn't optional anymore. Toxic content is getting pumped into your platform faster than humans can ever catch it.

AI doesn't just help. Combined with human effort, it's the only realistic solution. Without it? Your platform could drown in a flood of harmful garbage while your moderation team burns out.

AI or Not gives you detection tools that spot AI-generated fakes in seconds. Deepfakes, phony images, or cloned voices: we flag them right away.

You have a reputation to uphold. Stop gambling with your brand’s integrity.

FAQs

How do you moderate AI-generated content?

You can moderate AI-generated content with AI-driven detection tools, such as AI or Not. Such tools look for pixel-level inconsistencies and statistical patterns unique to generative models.

Can content moderation be automated?

Yes, but not fully. Ideally, AI handles about 90% of clear violations, while human review is reserved for context-dependent and edge cases.

What are some best practices for using AI for content moderation?

Start with clear guidelines. Train models on diverse data. Set different thresholds for different content types. Finally, always maintain human oversight.

What are the challenges with moderating user-generated content using AI?

Context is a killer: AI struggles with cultural nuances and humor. Also, false positives frustrate users who see nothing wrong with their post.