Deepfake Technology: What Exactly Is It? (2025 Edition)

Deepfakes are no longer just a buzzword; understanding them is required reading. Learn how deepfakes work and how AI or Not's deepfake detection tool protects against them.

Guardians of the Galaxy was the hot ticket at the theater and John Legend's All of Me was on repeat on the radio. The year was 2014, and it also happened to mark the birth of the technology behind deepfakes, when Ian Goodfellow and his colleagues introduced Generative Adversarial Networks (GANs). Although the technology already existed, the term "deepfake" didn't gain popularity until 2017, when a Reddit user started a now-defunct subreddit under that name. Its users shared face-swapped videos they had created, primarily of celebrities inserted into, ahem, NSFW content, which is why the subreddit was banned.

You'd think deepfakes were a recent phenomenon, given how prevalent they have become alongside the growing popularity of generative AI. But they are a decade-old innovation that has become easier than ever to access. What once required powerful tools can now be done with a free iPhone app.

As exciting as that is for innovation enthusiasts, is this technology a net positive or a net negative for society at large?

What exactly are deepfakes and how do they work?

Deepfakes are synthetic media in which a person's likeness is replaced with someone else's using artificial intelligence, or, with generative AI, a synthetic identity is created from scratch. What began as rudimentary face-swapping has evolved into technology capable of creating videos and audio that can fool even trained observers. While deepfakes have fun applications in entertainment (and memes!), their potential for spreading misinformation, creating non-consensual intimate content, and undermining trust in digital media has raised serious concerns.

Understanding this technology is no longer an academic exercise reserved for computer science majors but something that everyone has to be aware of and fluent in.

Understanding the core technology behind deepfakes

Deepfakes rely on neural networks called autoencoders and generative adversarial networks (GANs).

An autoencoder functions like a compression-decompression system. When creating a deepfake, the system first trains on thousands of images of two different faces, the source and the target. The encoder part of the network learns to reduce these facial images to their essential features: facial structure, expressions, and distinctive traits. It then extracts the features the two faces share, so that facial details can be swapped while realistic movement and expressions are preserved. The decoder then reconstructs the face from this compressed representation.
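To make the swap concrete, here is a deliberately tiny Python sketch of the arrangement classic face-swap tools use: one encoder shared by both people, plus a separate decoder for each person. It is hand-wired rather than trained, so treat it as an illustration of the data flow only. The "faces" are hypothetical four-number vectors (two identity features, two expression/pose features), the names Alice and Bob are made up, and the encoder and decoders are hard-coded stand-ins for neural networks that would normally learn these mappings from thousands of images.

```python
# Hypothetical toy "face" = [identity_1, identity_2, smile, head_tilt].
# Real models work on pixels and *learn* every mapping below; here they are hard-coded.
ALICE_IDENTITY = [0.9, 0.2]
BOB_IDENTITY = [0.1, 0.7]


def shared_encoder(face):
    """Compress a face to the features both people share (expression and pose).

    Stand-in for a trained encoder: it simply keeps the last two values.
    """
    return face[2:]


def make_decoder(identity):
    """Return a decoder that always renders one specific person.

    Stand-in for a trained per-person decoder: it re-attaches that person's
    identity features to whatever expression/pose code it receives.
    """
    def decode(code):
        return list(identity) + list(code)
    return decode


decode_alice = make_decoder(ALICE_IDENTITY)
decode_bob = make_decoder(BOB_IDENTITY)


def face_swap(frame, target_decoder):
    """Encode a frame with the shared encoder, then decode it as another person."""
    return target_decoder(shared_encoder(frame))


alice_frame = [0.9, 0.2, 0.8, -0.3]                # Alice smiling, head tilted
print(decode_alice(shared_encoder(alice_frame)))  # reconstruction: [0.9, 0.2, 0.8, -0.3]
print(face_swap(alice_frame, decode_bob))         # swap: [0.1, 0.7, 0.8, -0.3]
```

During training, each decoder only ever learns to rebuild its own person, which is why routing Alice's encoded expression through Bob's decoder produces Bob's face wearing Alice's smile and head tilt.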

GANs, the 2014 breakthrough, take deepfake technology further by introducing two competing neural networks: a generator that creates fake images and a discriminator that tries to tell real from fake. This face off (never a bad time for a Nic Cage reference!) forces continuous improvement, as the generator constantly evolves its output to outwit an ever-improving discriminator.
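The face off can be written down as a pair of loss functions. In the original GAN formulation, the discriminator D is trained to maximize log D(x) on real images plus log(1 - D(G(z))) on generated ones, while the generator G works against it; in practice the generator usually minimizes -log D(G(z)) instead, a "non-saturating" variant the original paper also suggested because it gives stronger gradients early in training. The sketch below only computes those two losses from discriminator probabilities. The probability values are made up for illustration; a real GAN would feed these losses into gradient updates of two neural networks.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy the discriminator minimizes.

    d_real: probabilities the discriminator assigns to real samples being real.
    d_fake: probabilities it assigns to generated samples being real.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# A sharp discriminator: confident on real images, rejects the fakes.
print(discriminator_loss([0.9, 0.9], [0.1, 0.1]))  # ~0.211 (discriminator winning)
print(generator_loss([0.1, 0.1]))                   # ~2.303 (generator losing)

# After the generator improves, the same discriminator is fooled.
print(discriminator_loss([0.9, 0.9], [0.8, 0.8]))  # ~1.715
print(generator_loss([0.8, 0.8]))                   # ~0.223
```

Whenever one network's loss falls, the other's rises, which is exactly the pressure that pushes both to keep improving.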

The evolution of deepfake algorithms since their inception

Early face-swaps built with autoencoders were fun and mostly innocent, but they weren't fooling anyone quite yet.

Generative Adversarial Networks (GANs), however, produced images and videos with more lifelike expressions and movements.

Most recently, as in the case of Midjourney and DALL-E, diffusion models have become a popular method. These models start with pure noise, or mess, and gradually refine it into a photorealistic image; think of going from an abstract piece of art to a photo taken with a DSLR camera. This is done with the following two-step process:

1. Forward Diffusion (Adding Noise) – During training, the model takes real images from its training data and progressively adds random noise until each image is completely unrecognizable.

2. Reverse Diffusion (Denoising) – The model learns to reverse this process by predicting and removing the noise step by step. To generate a new image, it starts from pure random noise and applies this denoising repeatedly until a high-quality, realistic image emerges.
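The two steps above map onto a short calculation. Below is a minimal, pure-Python sketch of DDPM-style diffusion, one common formulation assumed here for illustration (it is not necessarily what Midjourney or DALL-E run internally), applied to a hypothetical three-pixel "image". The forward step uses the closed-form shortcut x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise, which jumps to any noise level at once. For the reverse step, a real model uses a trained neural network to predict the noise; here a hypothetical `oracle_noise` function cheats by knowing the original image, which is enough to show the denoising loop walking from pure noise back to a clean result.

```python
import math
import random

# Linear noise schedule with the values used in the original DDPM paper (Ho et al., 2020).
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
alphas = [1.0 - b for b in betas]
alpha_bars = []  # alpha_bar_t = product of alphas up to and including step t
running = 1.0
for a in alphas:
    running *= a
    alpha_bars.append(running)


def forward_diffuse(x0, t, rng):
    """Forward diffusion: jump straight to noise level t.

    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps, with eps ~ N(0, 1).
    """
    ab = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, eps)]
    return xt, eps


def reverse_step(xt, t, eps_hat, rng):
    """Reverse diffusion: one DDPM denoising step from x_t to x_{t-1}."""
    coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
    mean = [(x - coef * e) / math.sqrt(alphas[t]) for x, e in zip(xt, eps_hat)]
    if t == 0:
        return mean  # final step: no fresh noise is added
    sigma = math.sqrt(betas[t])
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]


def oracle_noise(xt, t, x0):
    """Hypothetical stand-in for the trained network: it cheats by knowing x0."""
    ab = alpha_bars[t]
    return [(x - math.sqrt(ab) * c) / math.sqrt(1.0 - ab) for x, c in zip(xt, x0)]


rng = random.Random(0)
image = [0.2, -0.5, 0.9]  # a hypothetical 3-pixel "image"

# Forward: at the last step, sqrt(alpha_bar) is below 0.01, so almost no signal survives.
noisy, _ = forward_diffuse(image, T - 1, rng)

# Reverse: generation starts from pure noise and denoises one step at a time.
x = [rng.gauss(0.0, 1.0) for _ in image]
for t in reversed(range(T)):
    x = reverse_step(x, t, oracle_noise(x, t, image), rng)
print([round(v, 6) for v in x])  # [0.2, -0.5, 0.9]
```

With a real model, `oracle_noise` is replaced by a network trained to predict the added noise from many forward-diffused training images, so it can produce new images instead of recovering one it already knows.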

What are some examples of deepfakes that broke the internet?

The same breakthroughs can be used to make memes with friends or to spread false information.

Notable deepfake videos that went viral

Scammers using AI-generated images of Brad Pitt to con a woman out of $850,000.

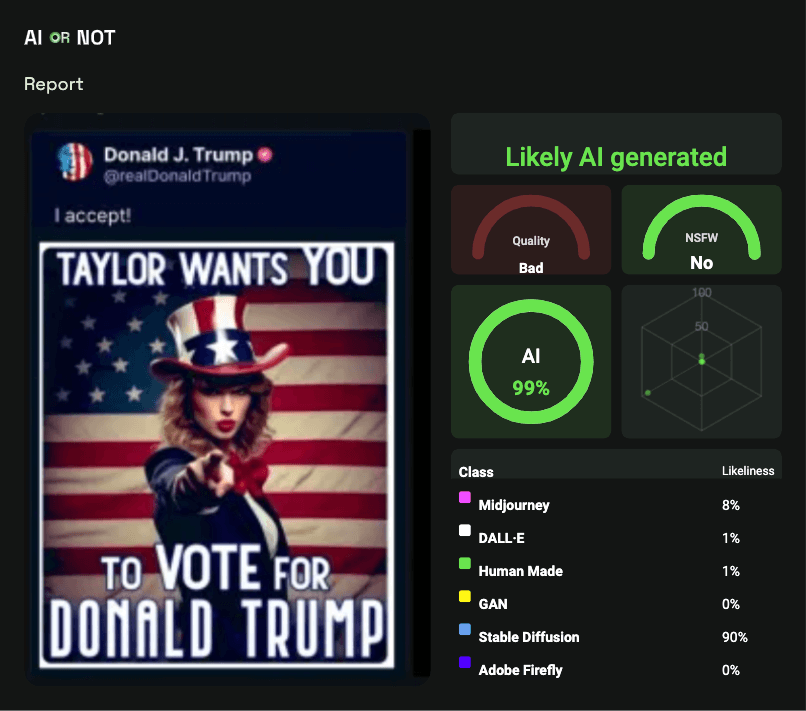

An AI-generated image of Taylor Swift promoting Donald Trump for President.

The most concerning part is that using this technology no longer requires NASA-level machines or a deep understanding of artificial intelligence. These examples came from publicly available generators, most of which were free.

Deepfake audio recordings

Next time you pick up the phone from an unknown number, think twice before speaking too much.

"Who is this?"

"I'm having trouble hearing you, can you repeat that?"

"I'm sorry I think you have the wrong number, that's not me."

That could be all it takes to clone your voice: as little as 15-30 seconds of audio can be enough to make a synthetic version of your voice say, well, anything.

Examining the use of deepfakes in entertainment and media

Paul Walker reappearing in Furious 7. Carrie Fisher materializing as Princess Leia in “Rogue One: A Star Wars Story.” Robert De Niro and Al Pacino de-aged in the Netflix film “The Irishman.”

Who doesn't want to look younger, right?!

But when accents are altered and Oscars are won, as with Adrien Brody's award for The Brutalist, where do we draw the line on using these tools in movies? And should viewers be told where AI was used?

Heck, one Netflix show's entire premise is spotting deepfakes of your significant other in, ahem, interesting situations; it's called 'Deepfake Love', or 'Falso Amor' in Spanish.

Is the next great producer, like Dr. Dre or Metro Boomin, going to use AI-generated tools right from their bedroom? Maybe the only thing holding back some incredible singers is that they don't have beats or music to sing over. But now, with AI in media, they can get a new beat or a new jingle on command. Need a hook for your song? No problem, ChatGPT it.

Using deepfake detection tools and software

If you have ever been fooled by facial expressions that turned out to be fake, it's not just you; even Elon Musk has admitted to not being able to tell what's real anymore.

This is exactly why AI or Not was developed: using deep learning and facial recognition, our AI threat intelligence detects deepfakes with industry-leading accuracy. AI or Not's AI and ML algorithms learn to distinguish the patterns of real photos, audio, and video from those of fake ones.
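As a rough illustration of what "recognizing patterns" means in general, the sketch below trains a generic logistic-regression classifier to separate real samples from fake ones. This is not AI or Not's model, whose architecture isn't described here. The two features (imagine scores for blending artifacts and lighting inconsistencies) and the six training examples are entirely hypothetical. Production detectors learn their features from raw pixels or audio with deep networks, but the underlying idea of fitting a decision boundary to labeled real and fake examples is similar.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_fake_probability(w, b, x):
    """Probability the model assigns to sample x being fake."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_detector(samples, labels, lr=1.0, epochs=2000):
    """Fit logistic regression with batch gradient descent.

    samples: feature vectors; labels: 1 = fake, 0 = real.
    """
    n, d = len(samples), len(samples[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(samples, labels):
            err = predict_fake_probability(w, b, x) - y  # gradient of the log loss
            for i in range(d):
                grad_w[i] += err * x[i] / n
            grad_b += err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical features: [blending_artifact_score, lighting_inconsistency_score]
samples = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.3],   # real
           [0.7, 0.8], [0.8, 0.7], [0.9, 0.9]]   # fake
labels = [0, 0, 0, 1, 1, 1]

w, b = train_detector(samples, labels)
print(predict_fake_probability(w, b, [0.85, 0.85]) > 0.5)  # True: flagged as fake
```

Logistic regression is used here only because it fits in a few lines; it shows the train-on-labeled-examples workflow, not the accuracy a deep model would reach on real media.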

Why are deepfakes considered dangerous in 2025?

Anything that tries to fool people is inherently bad. Deepfake technology and AI-generated content are just the latest tools that bad actors use against people and companies.

What also makes deepfakes so dangerous in 2025 is their multimodal nature. We started with text, then came images, followed by audio and video. Combining all of these makes it harder and harder for people and companies to see what's real and what's not. Imagine someone writing you personalized one-to-one messages, backing them up with matching images, then sending you audio, and finally a deepfake video.

The threat of misinformation and disinformation campaigns

Social media used to be a hub for families to communicate, for high school friends to stay connected, and for people to have real, genuine engagement. Now social media is rife with bots flooding the comments sections and misinformation from random accounts. Whether it's individuals trying to force an issue on others or nation-states coordinating networks of fake profiles to push propaganda, they are increasingly using artificial intelligence to do it.

Even deepfakes made to share a positive message can violate privacy. In one case, an AI-generated video used famous people's faces to push back against Kanye West's hate speech, but even then, there were concerns about these tools being used without the consent of the people depicted.

Implications for cybersecurity and personal privacy

We may now want to think twice before posting that photo, or ten, of ourselves or our loved ones. With AI-generated technologies, it takes only about that many images, fewer than a dozen, to create deepfake attacks and AI-generated versions of a person. What does this mean for our personal privacy? Should we be monitoring how our face is used across the internet?

The ongoing debate: Freedom of expression vs. potential harm

Is a good meme worth the cost in trust and public harm? While it can be funny at times to put someone's face onto another person's body, that same capability is why biometric security is becoming necessary to tell what's actually real.

When used in movies, it could lead to great entertainment or even sentimental moments. But when criminals with bad intent are creating convincing deepfakes with facial expressions and body parts that look lifelike, people can be harmed in many ways.

This may be the next issue you hear debated during an election cycle or on the nightly news, as the right answer is still unclear.

What does the future hold for deepfake technology beyond 2025?

At the end of 2022, we first experienced the magic of ChatGPT. In 2023, we were generating funny images with AI, 6 fingers and all. In 2024 we were creating beats and catchy songs with AI and starting to experiment with video. What should we expect in 2025?

Predicted advancements in AI-generated media

Deepfake technology and generative AI will be multimodal: text, image, audio, and video content will mesh seamlessly. This convergence will allow creators to produce hyper-realistic but fake media that can, for instance, depict entire conversations or elaborate stories. It will also allow bad actors to do the same when trying to fool someone.

The other great innovation will come from the open-source AI community. For better or worse, models developed in China, such as DeepSeek and Qwen, are leading the charge and topping developer leaderboards. These open-source projects democratize access to advanced AI technology, empowering independent developers and hobbyists alike. However, the same accessibility means malicious actors can use these powerful models without safeguards. While ChatGPT will decline certain questions and requests, these open-source models are often more than happy to oblige.

The race between deepfake creation and detection technologies

Is regulating deepfakes the answer? There is an argument to be made that the same illegal activities, such as fraud, scams, and KYC (Know Your Customer) impersonation, have been happening all along and will continue to happen; criminals are just using new tools.

And even if regulations against malicious deepfakes were enacted worldwide this very moment, it might be too late. The tools to create deepfakes are already widely available and often open source. The bad actors using them for deepfake fraud aren't going to return their tools to sender because a regulator asks. In fact, after regulation, whatever they have already downloaded and put to use becomes that much more valuable.

That's why AI or Not was created: to detect all forms of deepfakes. Whether it's an audio deepfake or an AI-generated photo or video, our algorithms have been trained to recognize patterns specifically to identify manipulated content. We will continue to invest in AI-based verification methods that lead the industry in accuracy across every modality.

Potential societal impacts of widespread deepfake use

With the increasing accessibility of multimodal deepfake technology, everyday users, news outlets, and even political entities may be exposed to an overwhelming volume of fake media. While these advancements offer exciting opportunities for expression and personalized media experiences, they also risk eroding trust in information that's actually real. To combat the dark side, technological solutions like AI or Not's are needed to help both people and companies defend against all of these attack vectors.