What Is Content Moderation In Social Media? The War on Toxic Content

What is content moderation in social media? It is the systematic process of monitoring, filtering, and managing user-generated content on social platforms.

We don’t have to remind you of the power and reach of social media. Billions of posts spread across Facebook, Instagram, X, TikTok… you name it.

Most of those posts are fine, but some are ticking time bombs: fake reviews, hate rants, and deepfakes that could sink your brand faster than you can say “refresh.”

Think of the impact of a bogus product review slashing your e-commerce sales overnight, or a deepfake of your CEO hawking crypto scams to your customers.

These aren’t sci-fi movie subplots. This is real. In 2023 alone, AI-generated fraud hit businesses for a whopping $10 billion worldwide.

That’s where content moderation comes in, playing defense against all the chaos. It’s the gritty work of keeping social media from turning into a free-for-all dumpster fire.

In today’s guide, we’re ripping apart how it works, why it’s your shield against digital disasters, and how AI or Not can step up to stop fraud before it goes viral.

What is Content Moderation in Social Media, Really?

Content moderation in social media is the systematic process of monitoring, filtering, and managing user-generated content on different platforms. It's essentially digital quality control for the millions of posts uploaded every hour.

Platforms actively review and remove inappropriate content before or after publication to protect users.

Without moderation, social media would turn into a virtual sewer.

Moderation builds boundaries that keep online spaces respectful and safe for communities. These rules align with platform-specific guidelines and satisfy legal requirements across jurisdictions.

Good moderation protects users while preserving a platform's reputation.

The Importance Of Content Moderation In Social Media

Here are a few reasons why content moderation is important on social media:

Brand protection: Your company logo next to extremist content can kill trust. Customers remember which brands appear beside harmful material.

Legal compliance: Social platforms face different regulations in every country. Effective moderation helps navigate these legal waters without costly penalties.

User retention: People abandon platforms that feel unsafe or toxic. Strong moderation keeps users engaged.

Preventing real-world harm: Online threats can spark offline violence. Moderation breaks this dangerous chain.

Business continuity: One content scandal can trigger advertiser boycotts and stock crashes. Major platforms lost millions when moderation failed.

Moderation isn't just ethical. It could be the difference between failure and financial survival.

How Content Moderation Actually Works

Ever wonder what happens after you report a harmful post? Behind the scenes, there’s a lot of movement:

Content flows through multiple review layers using human moderators, AI systems, and user reports.

Moderators quickly decide if content stays visible, gets age-restricted, or faces complete removal.

(The workforce scale is staggering – Meta employs 15,000 moderators while TikTok has over 40,000)

Decisions follow both local legal requirements and platform-specific community standards.

Moderators typically work against strict quotas, reviewing disturbing material for hours every day.

This invisible workforce processes millions of posts while most users remain completely unaware.

The 4 Main Methods Social Media Platforms Use To Moderate Content

Content moderation isn't a one-size-fits-all solution. Here are the four moderation pillars working to keep social media content (mostly) safe:

1 - AI-Powered Moderation

AI systems have become the front line against problematic content across major platforms. Machine learning algorithms analyze text for hate speech, images for violence, and videos for policy violations, all before posts go live.

Pattern recognition software spots threats (like terrorist propaganda or child exploitation material) while natural language processing deciphers slang and coded language.

Real-time processing blocks about 90% of harmful content instantly, but AI still misses sarcasm. A meme mocking extremism, for example, might get flagged as extremist content itself.

While cost-effective for scale, these systems require constant updates to outsmart trolls inventing new evasion tactics.

2 - Human Moderation Teams

Why hire humans if AI’s so smart? Good question.

The answer is that context and intent matter, and AI isn’t great at either.

Human moderators are trained professionals. They can tell the difference between an educational or historical reference and harmful intent.

Moderation specialists assess intent, cultural nuances, and humor that algorithms might misinterpret.

But there is a flip side: these moderators may see large amounts of traumatic content every day, so burnout rates soar and the psychological toll is real. Still, without human input, AI systems wouldn’t learn to distinguish satire from threats.

3 - Community Reporting

Users themselves play a vital role in identifying harmful content. In fact, more social networks, such as X and Facebook, are moving in this direction.

Ever reported a post and wondered if anyone actually checks? You’re part of the system.

User flags prioritize content for review, catching trends like viral scams before algorithms detect them.

Coordinated mass reporting can weaponize the system. Activists might flag legitimate political speech as “harassment,” while others ignore blatant violations.
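One way to picture how user flags could prioritize content while blunting mass reporting is the sketch below. It is only an illustration, not how any named platform works: the `reporter_trust` weights (for example, the share of a user's past reports that moderators upheld) and the function name are assumptions made for this example.

```python
import heapq
from collections import defaultdict


def build_review_queue(reports, reporter_trust, default_trust=0.5):
    """Return post IDs ordered from highest to lowest review priority.

    reports: iterable of (post_id, reporter_id) pairs.
    reporter_trust: hypothetical map of reporter_id -> weight in [0, 1].
    """
    reporters_by_post = defaultdict(set)
    for post_id, reporter_id in reports:
        # A set counts each reporter once per post, so repeat flags
        # from the same account do not inflate the score.
        reporters_by_post[post_id].add(reporter_id)

    heap = []
    for post_id, reporters in reporters_by_post.items():
        # Sum trust weights instead of raw counts, so many low-trust
        # accounts do not automatically outrank a few reliable ones.
        score = sum(reporter_trust.get(r, default_trust) for r in reporters)
        # heapq is a min-heap, so push the negated score.
        heapq.heappush(heap, (-score, post_id))

    return [heapq.heappop(heap)[1] for _ in range(len(heap))]
```

Weighting by reporter reliability is a design choice for this sketch. A real system would likely combine it with other signals, such as how fast a post is spreading.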

4 - Hybrid Moderation (AI + Human Review)

The gold standard is a tag-team approach. AI filters obvious violations, humans handle borderline cases, and both learn from each other.

For example, AI blocks clear nudity, while moderators review flagged posts about eating disorders.

This feedback loop sharpens AI accuracy over time. When moderators overturn an AI decision (like approving a breast cancer awareness post mistakenly flagged for nudity) the system adjusts.

The result? Faster scaling with fewer errors.
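The tag-team flow described above can be sketched roughly as follows. This is a minimal illustration under assumptions: the classifier score, the two thresholds (`remove_at`, `review_at`), and the helper names are hypothetical and not taken from any platform's actual system.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "remove", "human_review", or "allow"
    reason: str


def route(violation_score, remove_at=0.95, review_at=0.60):
    """Route a post based on a classifier's violation score in [0, 1]."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= remove_at:
        # Obvious violations are handled automatically.
        return Decision("remove", "high-confidence violation")
    if violation_score >= review_at:
        # Borderline cases go to a human moderator.
        return Decision("human_review", "borderline score")
    return Decision("allow", "low score")


def record_overturn(training_examples, post_text, human_label):
    """Store a moderator's correction so a later retraining run can use it."""
    training_examples.append({"text": post_text, "label": human_label})
    return training_examples
```

For instance, `route(0.7).action` is `"human_review"`. If the moderator then approves the post, `record_overturn` keeps that correction as a labeled example for the next model update.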

But the real moderation go-to? AI or Not. Let us be your synthetic-media-spotting sidekick: real-time detection, zero data storage.

Common Content Types That Require Moderation On Social Media

Essentially, every minute, thousands of potentially harmful posts require review to maintain a safe experience for social media users.

These are some specific types of content that demand moderation:

Hate speech and discrimination: Content attacking individuals based on race, religion, gender, or other protected characteristics. This includes slurs, stereotypes, and calls for violence against specific groups.

Graphic violence and explicit material: Visuals showing extreme violence, gore, or sexual content. These materials can traumatize viewers and violate respective social media policies.

Harassment and bullying: Repeated targeting of individuals with threats, insults, or intimidation.

Misinformation and fake news: False claims presented as factual information. This spreads dangerous lies about health, politics, and overall public safety.

Spam and commercial abuse: Unwanted promotional content flooding user feeds. This includes phishing attempts, fake products, and pyramid schemes.

AI-generated fraud: Deepfakes and synthetic media that deceive users. Voice cloning technologies create fake celebrity endorsements or spread false information.

What Are Some Challenges for Content Moderation On Social Media?

If content moderation is so systemic, why do platforms struggle to keep hate speech, scams, or graphic violence from flooding your feed?

Volume & Scale

Billions of posts hit platforms daily. Facebook alone sees 350K+ uploads per minute. Human teams can’t physically review this onslaught of potentially harmful content. Even AI systems get overwhelmed, letting harmful material slip through the cracks.

Content Diversity

Text, memes, 4K videos, voice notes…each format needs different moderation tools. A TikTok dance video might hide violence in the background. Can your AI spot that while also scanning comments for harassment?

Contextual Ambiguity

“Let’s eat, Grandma” vs. “Let’s eat Grandma.” One missing comma changes everything. Sarcasm, regional slang, or cultural memes (like “based” meaning both “cool” and “extremist”) baffle algorithms.

Emerging Tech

Deepfakes can now mimic voices and faces convincingly. Generative AI pumps out fake news faster than detection tools can adapt, making it difficult to moderate content on social media. On the surface, a deepfake may look real and innocent while spreading false narratives at scale.

Freedom vs. Safety

Remove too much, and you’re accused of censorship. Remove too little, and you’re hosting illegal content. Should a post criticizing a government be allowed in Country A but banned in Country B?

Global Cultural Gaps

A thumbs-up emoji is positive in the US but offensive in Greece. Even colors spark debates—white symbolizes purity in some cultures and death in others, making perfect content moderation impossible.

Mental Health Toll

It’s not uncommon for human moderators to sift through some pretty heavy stuff. Former Facebook moderators report PTSD from constant exposure, which raises hard questions about whether platforms can ethically outsource this work.

Generative AI

Moderation systems, both the algorithms and the people behind them, may not be trained to recognize AI-generated content. What seems like a real post or actual news may in fact be produced by an AI generator.

Question: With these hurdles, is perfect moderation even possible? Smart systems blend AI speed with human judgment—while admitting they’ll never catch everything.

Here at AI or Not, we make moderation pretty close to perfect. Try AI or Not's AI detector today and detect harmful fakes before they take a toll on your brand.

How AI is Revolutionizing Content Moderation For Social Media

Traditional moderation tools are drowning.

Last year’s fake images had six fingers. Today’s deepfakes blink naturally and mimic voice patterns. Moderators used to be able to tell what was real and what wasn’t, but not so much anymore. So how do you spot a scam when the scammers use your own tech against you?

It’s simple: fight algorithms with better algorithms.

AI detection tools have become the lifeline social platforms need to survive this chaos.

And that’s where we come in.

AI or Not Solves Social Media Moderation Challenges With Ease

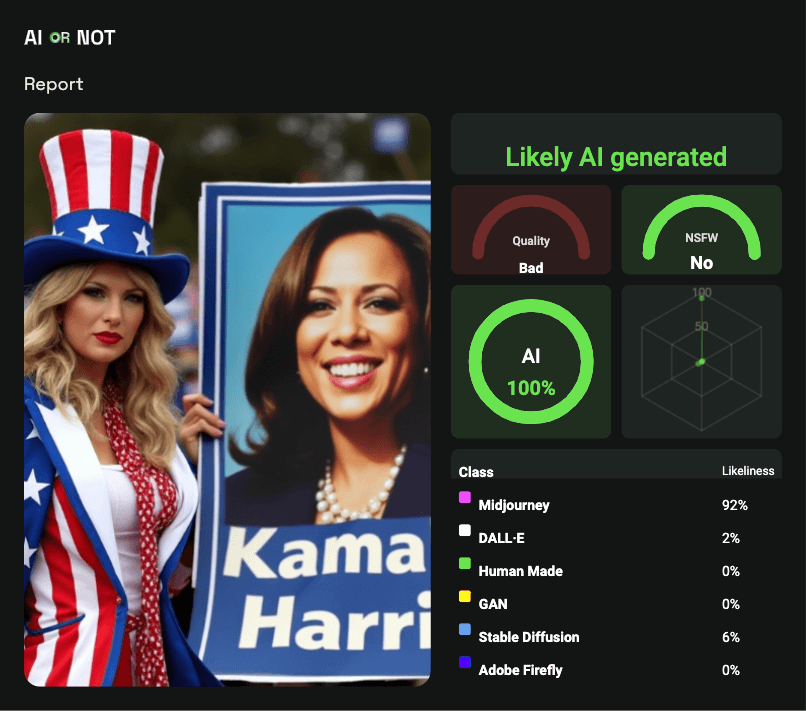

Here's the AI Detection Proof that Taylor Swift Did Not Endorse Donald Trump

Platforms now face synthetic content that evolves faster than rules can adapt. A viral Taylor Swift deepfake took X 17 hours to remove (by which point it had already racked up 45 million views).

AI or Not tackles this gap with tools designed for speed and precision:

✅ Scans images in seconds, spotting fakes from models like Stable Diffusion

✅ Flags AI-generated faces that bypass human moderators

✅ Provides confidence scores (e.g., "92% likely AI") for informed decisions and a risk-based approach for trust & safety teams

✅ Operates with zero data retention—content is deleted immediately post-analysis

Real Problems Where AI or Not Helps

Here's how AI or Not helps solve problems social platforms face on a day-to-day basis:

KYC verification: Blocks AI-generated selfies and identity documents

Trust & Safety: Flags photorealistic but synthetic content before it damages platform trust

Brand Protection: Identifies fake endorsements and impersonation attempts

Content Authenticity: Distinguishes between human and AI-created content

Election Integrity: Detects political deepfakes that could sway voters

Future of Social Media Content Moderation: What's Next?

The next phase of content moderation isn’t about faster filters. It’s about smarter systems that anticipate problems before they explode.

Advanced contextual understanding:

Next-gen AI analyzes slang, sarcasm, and regional dialects for more accurate moderation.

Personalized moderation:

Future tools let users set individual boundaries. Parents might block gambling ads, while activists filter harassment keywords—all without universal censorship.

Transparent moderation:

Users hate mysterious bans. Tomorrow’s systems will explain exactly why the content was removed.

Cross-platform coordination:

Terror groups post the same propaganda everywhere. Shared threat databases let different social platforms nuke identical content globally—denying bad actors safe havens.

Regulatory evolution:

New EU laws demand 1-hour takedowns for terrorist content. AI will become compliance armor—automating reports and proving due diligence to avoid billion-dollar fines.
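As a rough illustration of the cross-platform coordination idea above, a shared threat database can be thought of as a set of content fingerprints that every participating platform checks uploads against. The sketch below is an assumption-laden example: the class and function names are hypothetical, and it uses SHA-256, which only matches byte-identical files. Catching re-encoded or cropped copies would need a perceptual hash, which is not shown here.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return a SHA-256 hex digest used as an exact-match fingerprint."""
    return hashlib.sha256(content).hexdigest()


class SharedThreatDatabase:
    """Hypothetical in-memory stand-in for a shared hash list."""

    def __init__(self):
        self._known = set()

    def add(self, content: bytes) -> str:
        # Only the digest is stored, never the content itself.
        digest = fingerprint(content)
        self._known.add(digest)
        return digest

    def is_known(self, content: bytes) -> bool:
        return fingerprint(content) in self._known
```

Once one platform adds a file's fingerprint, any other platform calling `is_known` on the same bytes gets a match without the file itself ever being exchanged.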

The Path Forward - Protecting Your Business in an AI-Generated World

How long would it take your current team to spot a harmful deepfake? 10 seconds or 10 hours? Detection tools are now critical infrastructure: your frontline defense against fake content on social media.

It’s time for businesses and brands to build moderation systems into their workflows, train teams on emerging tactics, and integrate detection tools that learn faster than fraudsters.

Looking for a starting point? Use AI or Not.

Our tool flags fake content, including images, audio, and deepfakes, in seconds rather than hours. With us, you can set custom thresholds, integrate via API, and delete data post-analysis to avoid privacy pitfalls.

The brutal truth is AI content grows smarter every minute. You either deploy detectors that evolve faster or let synthetic chaos erode your brand.

Get protected (and stay protected) today with AI or Not!

FAQs

What does a social media content moderator do?

Content moderators review user posts, comments, and media to enforce platform rules. They remove harmful material (hate speech, scams, explicit content) and escalate legal violations.

Example: A Facebook moderator deleting AI-generated fake news about elections or a TikTok reviewer blocking videos promoting self-harm.

What is content moderation, with an example?

Content moderation screens user-generated content to block harmful material. For example, Instagram uses AI to detect and remove deepfake nudes and YouTube automatically flags extremist propaganda in comments.

What are some best practices for content moderation?

Combine AI speed with human judgment for nuanced cases.

Update guidelines quarterly to address new threats like AI voice clones.

Use transparency reports showing removal rates/appeals.

Train moderators on cultural context (e.g., memes vs. harassment).

Deploy real-time detection tools for emerging scams.